Despite the wide array of information systems available for biopharmaceutical manufacturing, we need to adopt a “follow-the-workflow” approach to overcome the process inefficiencies generated by quality and production silos.

For the better part of thirty years, information systems have been a critical component of most biopharmaceutical manufacturers’ quality programs, helping production and quality professionals capture, process, and analyze the data associated with their product batches, and comply with regulatory guidelines.

As technology has advanced, the number of systems in the typical GMP organization has grown, along with their features and functions. However, significant productivity and compliance gaps remain.

Two issues in particular have persisted and, in fact, have been made worse by the growing footprint and capabilities of these information systems. The first is moving and processing information as part of the production workflow; the second is compiling, reviewing, and approving that information to create the final batch record and release the product. Innovation in production and quality technology, along with demands for scale and cost-efficiency in biotherapeutic production, is creating new pressures and opportunities for electronic systems to genuinely improve the speed, quality, and compliance of product release. Meeting that challenge, however, requires a new perspective on how we choose, implement, and prioritize these IT investments.

Many Information Systems, But the Same Inefficiencies

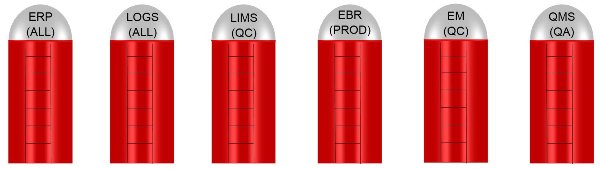

Today there are plenty of systems available to store and report batch record data. These include LIMS for quality control results, LMS for training records, equipment calibration systems, document management systems for storing and retrieving SOPs, and SCADA and data historians for data acquisition and production equipment monitoring. On top of these, MES is used for process and production equipment control, ERP for resource planning and materials management, CAPA systems for monitoring and controlling deviations, and, more recently, electronic batch record systems for producing the final (partially) complete batch record.

Yet with all of these resources at our disposal, the same inefficiencies remain. A recent survey conducted by our research partner found that more than two thirds of GMP biopharma manufacturers use a combination of software, paper, and spreadsheets to manage their batch records, and that 96% have a batch recording error rate of 20% or more. As a result, it takes as many hours to review, reconcile, and correct batch record data as it does to record it! Quality assurance then faces the Herculean task of compiling production and quality information from multiple sources, reviewing paperwork for errors and compliance, and managing the CAPA process before finally approving the batch record. Our research indicates this consistently adds 10–12 days to product release.

A major challenge is that IT investments are made in a fragmented way, with each system chosen to play a specific role in the overall process. Selecting one system to do everything usually results in nothing being done well, but implementing the right tool for each job without considering how it fits into the whole production and quality control workflow for a batch will meet only half of our needs.

Even with a full complement of best-practice systems, most biopharma organizations today still segregate quality and production systems, creating silos of data in systems and devices that do not communicate with one another. Consequently, they have to fill the gaps with paper and spreadsheets, with personnel moving and correcting data from one process step to the next. It’s a potential recipe for errors and delayed product release. What’s needed, then, is a new approach to implementing, maintaining, and integrating our information systems.

Information System Optimization: What’s the Solution?

We need to look at our systems in the context of the workflow we actually perform when producing a batch. Of course, this starts with our SOPs, but far too often the linear nature of these documents fails to reflect how we actually carry out production and quality control. Instead, we need to take a step back and apply a “follow-the-workflow” approach to our digital investments, looking at each step in our process with the following principles in mind:

- Data integrity – We need to do much more than make sure our device data is transferred to a validated database. Ensuring data integrity means we can confidently say, for each step of our process, that data is captured in a way that complements how we actually work and test. Data integrity is the confidence that the information is visible and useful, improving accuracy in the next step of the process and reducing or eliminating reviews at the end.

- Integration – This involves looking at the information from our production and quality systems together in the context of each major step in batch production, and then prioritizing integration and validation of these systems and devices depending on their impact on right-first-time rates and time to release.

- On-demand review and analytics – We have plenty of data available but little ability to deliver information that is timely and actionable to our production and quality control staff, let alone data that will immediately prevent deviations and continuously improve our process. We need validated, visual, timely, and actionable analytics based on the role of each person in the process, designed for the way they use this information on a daily basis.

- Flexibility and repeatability – Aligning our information technology portfolio with our batch workflow is only as useful as that portfolio’s flexibility to change and improve as our production and testing processes evolve. This is especially true given the number of complex biotherapeutics and biosimilars currently in clinical manufacturing and scale-up. Workflow modeling tools based on Quality-by-Design principles, together with execution systems that bridge the gaps between process steps and islands of digital information, allow us to adapt, deploy, and validate system changes quickly and efficiently, building on previous usability and integration improvements. We’ll know we are succeeding with a flexible, repeatable system portfolio when batch review by exception becomes the norm for quality assurance and our time to release decreases as our processes improve.
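As a rough illustration of how integration (viewing production and quality data together for each batch step) makes batch review by exception possible, the sketch below combines entries from several source systems and surfaces only the missing or out-of-specification values for QA. It is a minimal sketch under stated assumptions: the `RecordEntry` fields, the function names, the parameters, and the limits are all hypothetical and are not part of MODA™ or any other product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RecordEntry:
    """One captured data point. All field names are hypothetical, for illustration only."""
    batch_id: str
    step: str                 # process step, e.g. "bioreactor"
    source: str               # originating system, e.g. "MES", "SCADA", "LIMS"
    parameter: str
    value: Optional[float]    # None means the value was never captured
    low: float                # lower specification limit (assumed)
    high: float               # upper specification limit (assumed)


def group_by_step(entries):
    """Integration step: combine entries from all source systems into one view per (batch, step)."""
    grouped = {}
    for e in entries:
        grouped.setdefault((e.batch_id, e.step), []).append(e)
    return grouped


def review_by_exception(entries):
    """Return only the entries QA needs to look at: missing or out-of-spec values."""
    exceptions = []
    # Sort by (batch, step) so the exception list is reported in a stable order.
    for (batch_id, step), step_entries in sorted(group_by_step(entries).items()):
        for e in step_entries:
            if e.value is None:
                exceptions.append((batch_id, step, e.source, e.parameter, "missing"))
            elif not (e.low <= e.value <= e.high):
                exceptions.append((batch_id, step, e.source, e.parameter, "out of spec"))
    return exceptions


# Usage with made-up example values: only the two problem entries are reported.
entries = [
    RecordEntry("B001", "bioreactor", "MES", "pH", 7.05, 6.9, 7.3),
    RecordEntry("B001", "bioreactor", "SCADA", "temperature_C", 38.1, 36.5, 37.5),
    RecordEntry("B001", "release", "LIMS", "endotoxin_EU_per_mL", None, 0.0, 0.5),
]
for row in review_by_exception(entries):
    print(row)
```

In a real deployment the specification limits would more likely come from the approved process definition than be stored on each entry; keeping them inline here simply keeps the example self-contained.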

At Lonza, we developed the MODA™ Platform with a “follow-the-workflow” approach to meet all of these principles. The MODA™ Solution enables paperless, real-time data capture, as well as on-demand tracking and reporting.

MODA™ also improves data integrity by providing full audit trails across the data lifecycle, and can be seamlessly integrated with other information systems.
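One common way to make an audit trail tamper-evident is to chain its entries by hash, so that any later edit to a recorded entry breaks the chain. The sketch below assumes that technique purely for illustration; it is not a description of how MODA™ implements its audit trails, and the `AuditTrail` class and its field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log in which each entry stores the hash of the previous one.

    Hypothetical illustration of a generic hash chain, not the MODA implementation.
    """

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self._entries = []

    def record(self, user, action, field, old, new, timestamp=None):
        """Append who changed what, when, and from which value to which."""
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        entry = {
            "user": user,
            "action": action,
            "field": field,
            "old": old,
            "new": new,
            "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self._entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry):
        # Hash every field except the stored hash itself, with keys in a fixed order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self):
        """Return True if every entry is unaltered and still linked to its predecessor."""
        prev_hash = self.GENESIS
        for entry in self._entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


# Usage: record a value and a correction, then simulate an unrecorded edit.
trail = AuditTrail()
trail.record("analyst1", "create", "pH", None, 7.05)
trail.record("analyst1", "correct", "pH", 7.05, 7.08)
print(trail.verify())
trail._entries[0]["new"] = 7.20  # direct tampering, bypassing record()
print(trail.verify())
```

This check alone does not detect entries truncated from the end of the log unless the latest hash is also kept somewhere independent, such as a separate validated database.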

Conclusion

With time-sensitive biotherapeutics and mainstream use of personalized and autologous therapies on the horizon, we need to be better at managing and capitalizing on our information for the sake of the patients who depend on us to deliver safe and effective products. The opportunity to improve is there, and it starts with expecting a better user experience and better results from our information technology investments. Improving the way we invest in and implement advanced digital solutions will support the continued advancement of biopharmaceutical manufacturing, leading to higher-quality, cost-effective therapies and, ultimately, better patient health.